Mind The Air-Gap: Are We Obscuring Instead of Securing?

“For every engineer who says, ‘Nobody will go to the trouble,’ there’s some kid in Finland who will go to the trouble.”

One of my favourite quotes from Kevin Mitnick’s The Art of Intrusion has stuck with me since I first read it around 12 years ago.

It resonated with me because, as engineers, we can often justify poor security decisions by thinking, ‘the chances seem low’—an easy excuse under tight project deadlines. Or we promise ourselves we’ll come back to it. But do we?

Well, if you’re reading this, it's too late. Just kidding, but if you’re reading this, you probably already know what Security through Obscurity is. It's an old-ish concept. But how does it happen, why does it happen, and how do we stop it?

What is Security through Obscurity?

We’ve all seen the tactic of securing something by simply making it more difficult to find or access.

Sure, it can technically reduce the chances of the system being breached, but it does not eliminate them. In some instances, it doesn't reduce them at all. It points to a lack of trust in your existing security measures. This is where threat actors smell blood.

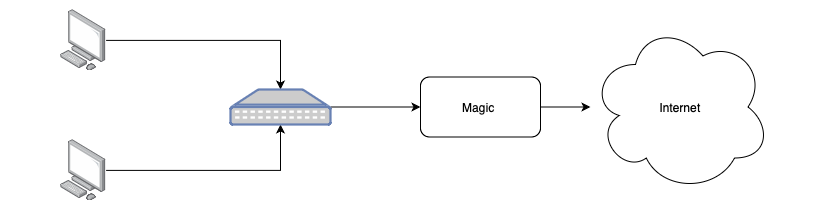

This GoldenJackal tool, which can jump to air-gapped networks, tells us that even air-gapping isn't secure anymore.

So hiding a technology doesn’t truly secure it; it just buys some time before someone exploits the vulnerability. And you’re likely to forget about it too, unless you have a meticulous cyber assurance plan in place or a fantastic memory.

What Does This Look Like in Practice?

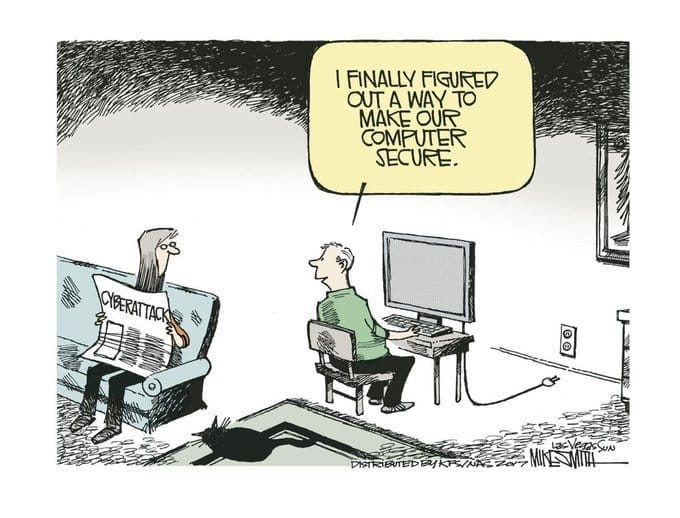

Let's just put it behind a firewall! Just putting your technology behind a firewall isn't enough.

But it happens a lot. In my infrastructure past, I've seen vulnerable servers acknowledged and then moved to a different VLAN instead of having the actual vulnerability remediated – as if 802.1Q tags were bulletproof! Eight times out of ten, securing is less work than obscuring.

Yes, securing services behind firewalls, restricting access to trusted subnets, and two-factor authentication (2FA) are all good practices. But when they’re the only practice, you’re in trouble. This is where you need defence in depth.

Why Does This Happen?

There are several reasons this can happen. It can range from poor understanding of the system, to design and engineering laziness, or rushed deadlines with constantly moving goalposts. Here are some common causes:

Lack of Understanding

This often occurs during a system’s lifecycle when a vulnerability arises around a lesser-known, high-dependency or legacy system. Instead of risking a remediation attempt with little understanding, the system is obscured to ‘reduce’ the risk, often creating a bigger one.

Similarly, this can occur when someone designs a system without a thorough understanding of how the service will work. This leads to costly rework to include security measures that should have been foundational.

Tight Project Deadlines

Tight project deadlines often lead to corner-cutting, especially when it comes to security. Unfortunately, some of the ‘easiest’ corners to cut are the security ones. An any:any firewall rule takes 2 minutes to set up, while app-, port-, host-, service- and time-based firewall rules could take days – and they both technically achieve the same outcome.

Naturally, under pressure, project teams will opt to cut these corners and vow to go back to them – but seldom do they. Once you make this mistake on one project, you’re inadvertently setting the security standard for future projects, and for everyone on that project too.
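If you do end up with a 'temporary' any:any rule, it helps to at least make it easy to find again. Here's a minimal sketch of a rule audit in Python. The `Rule` structure, the rule names and the addresses are all made up for illustration; a real firewall export will look different, so treat this as the idea rather than a tool.

```python
from dataclasses import dataclass

ANY = "any"


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified firewall rule (not any vendor's format)."""
    name: str
    source: str        # CIDR or "any"
    destination: str   # CIDR or "any"
    port: str          # port number as text, or "any"
    action: str = "allow"


def overly_permissive(rules):
    """Return (rule name, wide fields) for allow rules that use 'any'."""
    flagged = []
    for rule in rules:
        if rule.action != "allow":
            continue
        wide_fields = [
            field for field in ("source", "destination", "port")
            if getattr(rule, field) == ANY
        ]
        if wide_fields:
            flagged.append((rule.name, wide_fields))
    return flagged


# Illustrative rule set: one rushed any:any rule, one scoped rule,
# and one rule that is only half scoped.
RULES = [
    Rule("temp-project-rule", ANY, ANY, ANY),
    Rule("app-to-db", "10.0.1.0/24", "10.0.2.10/32", "5432"),
    Rule("legacy-admin", ANY, "10.0.3.5/32", "3389"),
]

if __name__ == "__main__":
    for name, fields in overly_permissive(RULES):
        print(f"{name}: 'any' used for {', '.join(fields)}")
```

Running something like this on a schedule won't fix the rule, but it does stop 'we'll come back to it' from quietly becoming 'we forgot about it'.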

Corner Cutting

Before I start – laziness isn't entirely a bad thing; there's good and bad. I call the good kind Engineering Laziness. It's often what drives engineers to find simpler and faster solutions. Given a complex solution, we will try to find a simpler way to make it easier to manage, maintain, and fix. Automation essentially emerged from Engineering Laziness. But when laziness isn't innovating, it's pure laziness.

But let's be honest – corner-cutting can be one of the main reasons we see Security through Obscurity, and it ties into the project deadlines issue. It's much easier and quicker to move a service behind a firewall, change it to a non-standard port, or create an any:any rule. But it's merely a sticky-plaster approach that becomes permanent.
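To show how little a non-standard port really hides, here's a rough Python sketch using only the standard `socket` module. It starts a throwaway listener on a random local port (standing in for the 'hidden' service) and then finds it with a basic connect scan. The function names and the scan window are my own choices for the demo, and you should only ever point something like this at hosts you're authorised to test.

```python
import socket


def find_open_ports(host, ports, timeout=0.2):
    """Return the ports in `ports` that accept a TCP connection on `host`."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports


def demo():
    """Hide a listener on an OS-chosen port, then find it by scanning."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))   # port 0 lets the OS pick a free port
    listener.listen(1)
    hidden_port = listener.getsockname()[1]
    try:
        # Small window around the port to keep the demo quick; a real
        # scanner would simply walk the whole 1-65535 range.
        low = max(1, hidden_port - 10)
        high = min(65535, hidden_port + 10)
        found = find_open_ports("127.0.0.1", range(low, high + 1))
    finally:
        listener.close()
    return hidden_port, found


if __name__ == "__main__":
    port, found = demo()
    print(f"'Hidden' service on port {port}; scan found open ports: {found}")
```

The port change costs the attacker a few seconds. The vulnerability behind it is still there.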

Security Afterthought

Frustratingly, security is often an afterthought. We’re getting better at it, but we’re not there yet. And that's why we see services created first, followed by a scramble to secure them at the end.

If you’re not considering security from the start, you’re going to have some vulnerabilities. Every service has vulnerabilities, but do you want to overlook the most obvious and most likely exploited ones by letting security be an afterthought?

Organic Growth

Not sure of the best terminology for this, but I like to call it solution creep. It starts as a single service, either as a POC or a small offering that contains little data and a small footprint, but it quickly grows to become a full offering.

The service grows to the point where it has domain admin accounts, sensitive user data, unproxied external web access, and default admin account credentials. The service becomes so important that downtime isn't acceptable, and security gets forgotten – usually until it's too late.

Cost

Sometimes it’s not cheap to secure your stuff. You can spend millions on securing a service. Why spend millions when we can use the firewall or poorly maintained AV we have? We have backups, don't we?

If your business and its reputation are worth less to you than the average cost of a cyber breach ($4.88m), then go ahead! Otherwise – invest in your security technology and personnel properly!

The Causes – Conclusion

So several factors can contribute to why we see security through obscurity. There are many more – but these are the ones I feel aren’t mentioned very often. But it's not all doom and gloom – there are simple ways we can avoid these scenarios.

What Can We Do?

There are two sides to this coin: technological and behavioural practices. One of the most common causes of security vulnerabilities is us – humans. So it would be naive to address this from only a technical point of view. The common term here is ‘Defence in Depth’, which means layering security practices to protect against threats. The following practices all contribute towards Defence in Depth.

Secure by Design

An obvious principle, often overpromised and under-delivered.

Build Security from the Start: Rushing to meet compliance regulations after the fact is slow, costly, and risky. Avoid the larger expense of a cyber attack by integrating security into your services from the very beginning.

Benefits: Reduces costs, delays, and risks over the long term.

Build Cyber Confidence

Do you have a system or server that you think, “If that were web-facing, we’d be in trouble!”?

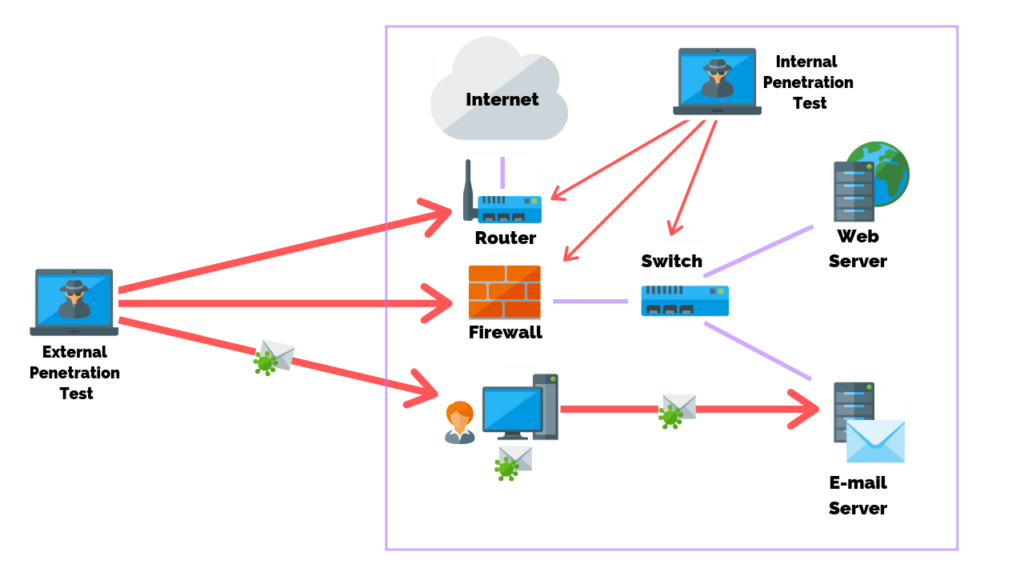

Treat All Systems as Web-Facing: Assume that threat actors are not just outside your network. Treat every service and system as if it’s exposed to the internet.

Don’t Mark Your Own Homework: Testing your own system is easy but limited by your own knowledge. Get an external perspective to uncover vulnerabilities you will likely overlook.

Proper Pen Testing: Invest in a real pen test from an accredited tester – and no, an AV scan of your servers won't cut it! Knowing your weaknesses is essential to improving security.

Protectively Monitor

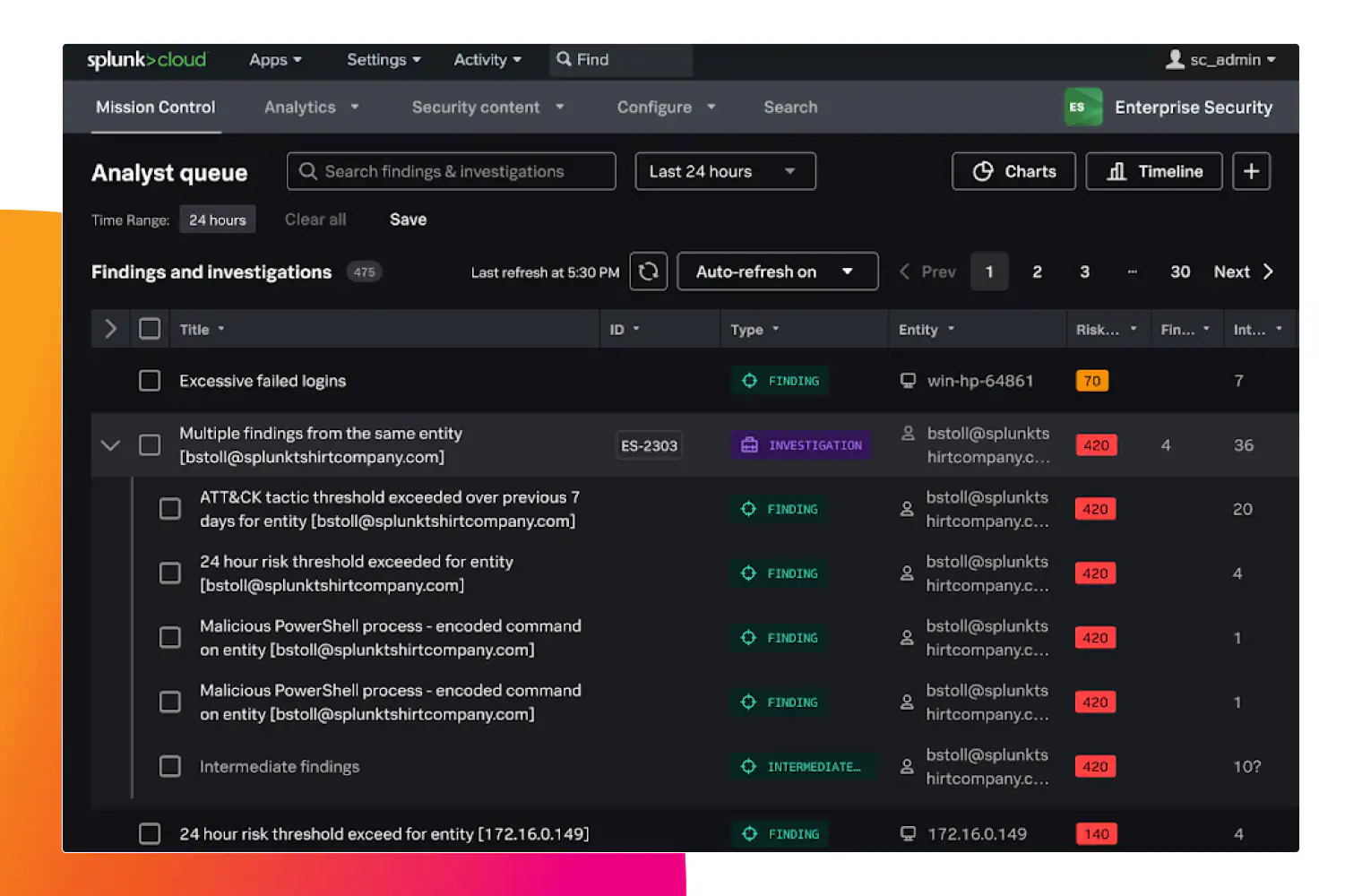

Protective Monitoring is crucial. Are you protectively monitoring? Or do you think you’re protectively monitoring?

Security through Observability: You wouldn't operate a bank without CCTV cameras. So why operate a network without any observability? Being able to see what's happening on your network is crucial for understanding which security measures are working and which are not.

SIEM TLC: Numerous businesses use a SIEM, but try and force security & operational logs through it by collecting everything and then just leave it. They then get alerted to everything that happens. And – trust me – engineers will set these alerts to go straight to the deleted items.

Death by alert actively puts engineers off looking at the SIEM, and you're more likely to miss things. Give your SIEM some TLC and tidy it up. Ingest valuable logs only, maintain a healthy onboarding strategy and tune alerts.
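As a rough idea of what 'tune alerts' can mean, here's a small Python sketch that drops alerts below a severity threshold and suppresses repeats of the same alert within a time window. The `Alert` fields, the severity scale and the five-minute window are all assumptions for illustration; your SIEM will have its own rule language and its own way of doing this.

```python
from dataclasses import dataclass

# Assumed severity scale, lowest to highest.
SEVERITY = {"info": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass(frozen=True)
class Alert:
    """Hypothetical alert record, not any particular SIEM's schema."""
    timestamp: int   # seconds
    source: str
    rule: str
    severity: str


def tune(alerts, min_severity="medium", window_seconds=300):
    """Drop low-severity alerts and suppress repeats of the same
    (source, rule) pair raised within `window_seconds` of the last one sent."""
    threshold = SEVERITY[min_severity]
    last_sent = {}
    kept = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        if SEVERITY[alert.severity] < threshold:
            continue
        key = (alert.source, alert.rule)
        previous = last_sent.get(key)
        if previous is not None and alert.timestamp - previous < window_seconds:
            continue
        last_sent[key] = alert.timestamp
        kept.append(alert)
    return kept


# Illustrative alerts only.
RAW = [
    Alert(0, "web01", "failed-login", "medium"),
    Alert(30, "web01", "failed-login", "medium"),      # repeat inside window
    Alert(60, "web01", "disk-usage", "info"),          # below threshold
    Alert(90, "db01", "new-admin-account", "critical"),
    Alert(400, "web01", "failed-login", "medium"),     # outside window again
]

if __name__ == "__main__":
    for alert in tune(RAW):
        print(alert)
```

Five raw alerts become three that someone might actually read. The thresholds are a judgement call for your environment, which is exactly why the SIEM needs ongoing TLC rather than a one-off setup.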

Adhere to Security Boundaries

Projects often come with tight deadlines, but don’t compromise on security.

Stick to Security Principles: If a project demands tight deadlines but hasn’t already accounted for security, don’t let security take a hit. Other people’s lack of planning doesn’t constitute an emergency on security’s part.

Be Transparent and Honest: Communicate the importance of security boundaries and timelines with stakeholders.

Incidents Are Inevitable

Cyber Incidents will happen. Don’t panic—prepare.

Think Beyond the Boundary: Too many security measures focus only on the perimeter. Don't assume threat actors are going to be outside of the network – they won't always be. Have measures in place for when they get past the boundary.

Layers of Security: Defence in Depth means ensuring there are hurdles beyond the perimeter—layers that protect your network, data, and reputation.
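To make the layering idea concrete, here's a toy Python sketch where a request has to clear three independent checks, and no check assumes the one before it did its job. The trusted range, the roles and the layer names are invented for the example; real environments will have more layers and far messier rules.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """Hypothetical request used only for this illustration."""
    source_ip: str
    authenticated: bool
    role: str
    action: str


TRUSTED_NETWORK = ipaddress.ip_network("10.0.0.0/8")              # assumed internal range
PERMISSIONS = {"admin": {"read", "write"}, "viewer": {"read"}}    # assumed roles


def network_layer(req):
    """Perimeter check: is the request coming from the trusted range?"""
    return ipaddress.ip_address(req.source_ip) in TRUSTED_NETWORK


def authentication_layer(req):
    """Being on the network is not proof of identity."""
    return req.authenticated


def authorisation_layer(req):
    """Being logged in is not permission to do everything."""
    return req.action in PERMISSIONS.get(req.role, set())


LAYERS = [
    ("network", network_layer),
    ("authentication", authentication_layer),
    ("authorisation", authorisation_layer),
]


def evaluate(req):
    """Return (allowed, failed_layer); every layer must pass."""
    for name, check in LAYERS:
        if not check(req):
            return False, name
    return True, None


if __name__ == "__main__":
    insider = Request("10.1.2.3", authenticated=False, role="admin", action="write")
    print(evaluate(insider))   # already inside the perimeter, still stopped
```

The point is the second and third layers. If the firewall were the only check, the unauthenticated insider above would have walked straight through.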

Build a Secure-First Team Culture

Engineers will inevitably handle vulnerabilities as they work — empower them to react accordingly.

Foster a Security-First Environment: Encourage engineers to take responsibility for security by cleaning up after themselves and watching out for others.

Adopt a Zero-Blame Culture: Mistakes are part of the process. A supportive culture ensures vulnerabilities are addressed without finger-pointing.

Actionable Steps: Run regular tabletop exercises and champion peer approval and collaboration to reduce solo working. Introduce 'bug-bounty' procedures to motivate identification and proper escalation of issues.

Final Thoughts

Security through Obscurity does have its place - but it can't be the only thing you have in place.

By understanding where it comes from, we can start to look at changing the culture behind it.

Don't go mad though - introducing too many security measures can discourage users from following procedures. If security starts to obstruct people from accessing systems – they will naturally try to circumvent our security measures!

- Murray